DeepMind breaks 50-year math record using AI; new record falls a week later

Aurich Lawson / Getty Images

Matrix multiplication is at the heart of many machine learning breakthroughs, and it just got faster, twice. Last week, DeepMind announced it had discovered a more efficient way to perform matrix multiplication, breaking a 50-year-old record. This week, two Austrian researchers at Johannes Kepler University Linz claim they have bested that new record by one step.

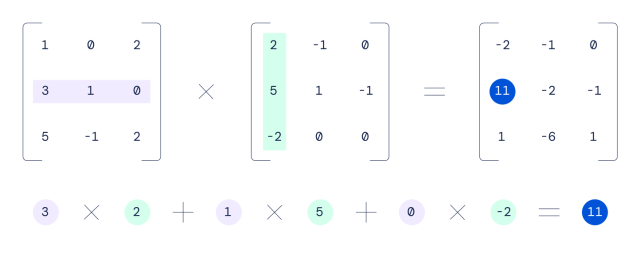

Matrix multiplication, which involves multiplying two rectangular arrays of numbers, is often found at the heart of speech recognition, image recognition, smartphone image processing, compression, and generating computer graphics. Graphics processing units (GPUs) are particularly good at performing matrix multiplication due to their massively parallel nature. They can dice a big matrix math problem into many pieces and attack parts of it simultaneously with a special algorithm.

In 1969, a German mathematician named Volker Strassen discovered the previous-best algorithm for multiplying 4×4 matrices, which reduces the number of steps necessary to perform a matrix calculation. For example, multiplying two 4×4 matrices together using a traditional schoolroom method would take 64 multiplications, while Strassen’s algorithm can perform the same feat in 49 multiplications.
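As a rough illustration of where 64 and 49 come from, the Python sketch below counts scalar multiplications for both approaches. Strassen's scheme multiplies two 2×2 matrices with 7 multiplications instead of 8; treating a 4×4 matrix as a 2×2 grid of 2×2 blocks and applying the scheme at both levels gives 7 × 7 = 49. The helper names (`MultCounter`, `schoolbook`, `strassen`) are made up for this example, and the code is written for clarity, not speed.

```python
class MultCounter:
    """Hypothetical helper that tallies scalar multiplications."""
    def __init__(self):
        self.mults = 0

def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def sub(A, B):
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def schoolbook(A, B, counter):
    """Row-times-column method: n**3 scalar multiplications."""
    n = len(A)
    C = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                C[i][j] += A[i][k] * B[k][j]
                counter.mults += 1
    return C

def split(M):
    """Cut a square matrix into four equal quadrants."""
    h = len(M) // 2
    return ([r[:h] for r in M[:h]], [r[h:] for r in M[:h]],
            [r[:h] for r in M[h:]], [r[h:] for r in M[h:]])

def join(C11, C12, C21, C22):
    """Reassemble four quadrants into one matrix."""
    top = [left + right for left, right in zip(C11, C12)]
    bottom = [left + right for left, right in zip(C21, C22)]
    return top + bottom

def strassen(A, B, counter):
    """Strassen's scheme, recursing on quadrants (size must be a power of 2)."""
    if len(A) == 1:
        counter.mults += 1
        return [[A[0][0] * B[0][0]]]
    A11, A12, A21, A22 = split(A)
    B11, B12, B21, B22 = split(B)
    # Seven block products instead of the usual eight.
    M1 = strassen(add(A11, A22), add(B11, B22), counter)
    M2 = strassen(add(A21, A22), B11, counter)
    M3 = strassen(A11, sub(B12, B22), counter)
    M4 = strassen(A22, sub(B21, B11), counter)
    M5 = strassen(add(A11, A12), B22, counter)
    M6 = strassen(sub(A21, A11), add(B11, B12), counter)
    M7 = strassen(sub(A12, A22), add(B21, B22), counter)
    C11 = add(sub(add(M1, M4), M5), M7)
    C12 = add(M3, M5)
    C21 = add(M2, M4)
    C22 = add(add(sub(M1, M2), M3), M6)
    return join(C11, C12, C21, C22)

A = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
B = [[2, 0, 1, 3], [1, 1, 0, 2], [4, 5, 6, 7], [0, 3, 2, 1]]
naive_count, strassen_count = MultCounter(), MultCounter()
same_result = schoolbook(A, B, naive_count) == strassen(A, B, strassen_count)
print(same_result, naive_count.mults, strassen_count.mults)  # True 64 49
```

The saving in multiplications is paid for with extra additions and subtractions, which this sketch does not count.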


Using a neural network called AlphaTensor, DeepMind discovered a way to reduce that count to 47 multiplications, and its researchers published a paper about the achievement in Nature last week.

Going from 49 steps to 47 doesn’t sound like much, but when you consider how many trillions of matrix calculations take place in a GPU every day, even incremental improvements can translate into large efficiency gains, allowing AI applications to run more quickly on existing hardware.
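To get a feel for how a two-step saving can compound, here is a back-of-the-envelope Python sketch. It assumes, which the article does not state, that each 4×4 scheme can be reused recursively on blocks of a larger matrix, and it counts only scalar multiplications, ignoring additions, memory traffic, and everything else that affects real GPU speed. The function names are invented for this example.

```python
import math

def total_multiplications(per_block, levels):
    """Multiplications when a 4x4 block scheme is nested `levels` deep."""
    return per_block ** levels

def growth_exponent(block_size, per_block):
    """Exponent w such that the nested cost grows like n**w."""
    return math.log(per_block) / math.log(block_size)

LEVELS = 5  # 4**5 = 1024, so this models a 1024x1024 product
for name, per_block in [("schoolbook", 64), ("Strassen", 49), ("AlphaTensor", 47)]:
    count = total_multiplications(per_block, LEVELS)
    exponent = round(growth_exponent(4, per_block), 3)
    print(f"{name:12s} {count:>13,d} multiplications, exponent {exponent}")
```

Under these assumptions, the 47-multiplication scheme needs roughly 19 percent fewer scalar multiplications than the 49-multiplication scheme at this size, because the per-block saving is applied at every level of nesting.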

When math is just a game, AI wins

AlphaTensor is a descendant of AlphaGo (which bested world-champion Go players in 2017) and AlphaZero, which tackled chess and shogi. DeepMind calls AlphaTensor the “first AI system for discovering novel, efficient and provably correct algorithms for fundamental tasks such as matrix multiplication.”

To discover more efficient matrix math algorithms, DeepMind set up the problem like a single-player game. The company wrote about the process in more depth in a blog post last week:

In this game, the board is a three-dimensional tensor (array of numbers), capturing how far from correct the current algorithm is. Through a set of allowed moves, corresponding to algorithm instructions, the player attempts to modify the tensor and zero out its entries. When the player manages to do so, this results in a provably correct matrix multiplication algorithm for any pair of matrices, and its efficiency is captured by the number of steps taken to zero out the tensor.

DeepMind then trained AlphaTensor using reinforcement learning to play this fictional math game, similar to how AlphaGo learned to play Go, and it gradually improved over time. Eventually, it rediscovered Strassen’s work and that of other human mathematicians, then it surpassed them, according to DeepMind.
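The game can be made concrete for the smallest interesting case. The Python sketch below is not DeepMind's code. It is a toy reconstruction under stated assumptions: the board is the 4×4×4 tensor describing 2×2 matrix multiplication, each move subtracts one product term described by three small vectors, and the seven moves listed are Strassen's known 2×2 scheme written in that form. The entry ordering (a11, a12, a21, a22) and all function names are choices made for this example.

```python
def matmul_tensor(n):
    """Board for n x n multiplication: entry [a][b][c] is 1 when the
    product A[a] * B[b] contributes to output entry C[c]."""
    size = n * n
    T = [[[0] * size for _ in range(size)] for _ in range(size)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                T[i * n + k][k * n + j][i * n + j] = 1
    return T

def apply_move(T, u, v, w):
    """One move: subtract the term (u . A) * (v . B), routed to outputs by w."""
    for a, ua in enumerate(u):
        for b, vb in enumerate(v):
            for c, wc in enumerate(w):
                T[a][b][c] -= ua * vb * wc

def is_zero(T):
    return all(x == 0 for plane in T for row in plane for x in row)

# Strassen's seven products as (u, v, w) triples.
# Vector positions are ordered (11, 12, 21, 22) for A, B, and C alike.
STRASSEN_MOVES = [
    ([1, 0, 0, 1],  [1, 0, 0, 1],  [1, 0, 0, 1]),   # (a11+a22)(b11+b22)
    ([0, 0, 1, 1],  [1, 0, 0, 0],  [0, 0, 1, -1]),  # (a21+a22) b11
    ([1, 0, 0, 0],  [0, 1, 0, -1], [0, 1, 0, 1]),   # a11 (b12-b22)
    ([0, 0, 0, 1],  [-1, 0, 1, 0], [1, 0, 1, 0]),   # a22 (b21-b11)
    ([1, 1, 0, 0],  [0, 0, 0, 1],  [-1, 1, 0, 0]),  # (a11+a12) b22
    ([-1, 0, 1, 0], [1, 1, 0, 0],  [0, 0, 0, 1]),   # (a21-a11)(b11+b12)
    ([0, 1, 0, -1], [0, 0, 1, 1],  [1, 0, 0, 0]),   # (a12-a22)(b21+b22)
]

board = matmul_tensor(2)
for move in STRASSEN_MOVES:
    apply_move(board, *move)
print(len(STRASSEN_MOVES), is_zero(board))  # 7 True
```

Reaching the all-zero board in seven moves corresponds to a correct 2×2 algorithm with seven multiplications. Finding a shorter sequence of moves for a larger board is the kind of search the article describes AlphaTensor performing.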

In a more complicated example, AlphaTensor discovered a new way to perform 5×5 matrix multiplication in 96 steps (versus 98 for the older method). This week, Manuel Kauers and Jakob Moosbauer of Johannes Kepler University in Linz, Austria, published a paper claiming they have reduced that count by one, down to 95 multiplications. It’s no coincidence that this apparently record-breaking new algorithm came so quickly, because it built off of DeepMind’s work. In their paper, Kauers and Moosbauer write, “This solution was obtained from the scheme of [DeepMind’s researchers] by applying a sequence of transformations leading to a scheme from which one multiplication could be eliminated.”

Tech progress builds off itself, and with AI now searching for new algorithms, it’s possible that other longstanding math records could fall soon. Similar to how computer-aided design (CAD) allowed for the development of more complex and faster computers, AI may help human engineers accelerate its own rollout.